AI Agents

Don't Just Chat, Charm: Crafting Virtual Agents with Personality

Disclaimer: The following article reflects my own comments based on my research and has no bearing on my employer. Any reproduction of this article needs explicit permission from the author. The article discusses concepts that are rapidly changing and should be read as a point-in-time view as of this release in November 2024.

By this point you are curious and getting ready to get hands on with the guide for developing AI agents, particularly as many would like to start with a conversational agent.

A reminder of some of the definitions we discussed before:

- Chatbot: A basic program designed to simulate conversation through text or voice, often following scripted interactions. (This existed pre-generative AI.)
- Virtual Agent: A more advanced AI that can perform specific tasks and provide support, often incorporating natural language processing and contextual understanding. (This also existed pre-generative AI.)
- Conversational AI Agent: An intelligent system capable of understanding, processing, and generating human-like dialogue across various contexts, often using machine learning to improve interactions over time.

When we consider the evolution of chatbots -> virtual agents -> conversational agents, their complexity has progressed with the expanding needs of the customer and with advancements in technology.

Before we delve into how to work with conversational agents, let’s dig into the key concepts for building a chatbot. If you have interacted with a conversational system (let’s forget for a moment what category the application is), you might have seen some of these behaviors.

The goal now is to learn how to build this application, one that can take questions in a natural language format and create the actions we need. If you are aware of the concept, this sounds like a finite state machine. Definition of Finite State Machines here.
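
To make the finite state machine idea concrete, here is a minimal sketch of a scripted chatbot flow. Everything in it is an assumption for illustration: the states, the intent names, and the keyword-based `detect_intent` helper are hypothetical stand-ins, not part of any product mentioned in this article.

```python
from enum import Enum, auto


class State(Enum):
    GREETING = auto()
    COLLECT_ORDER_ID = auto()
    SHOW_STATUS = auto()
    DONE = auto()


# Transition table: (current state, recognized intent) -> next state.
# The states and intents are made up for this example.
TRANSITIONS = {
    (State.GREETING, "track_order"): State.COLLECT_ORDER_ID,
    (State.COLLECT_ORDER_ID, "provide_order_id"): State.SHOW_STATUS,
    (State.SHOW_STATUS, "goodbye"): State.DONE,
}


def detect_intent(utterance: str) -> str:
    """Toy keyword matcher standing in for a real NLU model."""
    text = utterance.lower()
    if "track" in text:
        return "track_order"
    if any(ch.isdigit() for ch in text):
        return "provide_order_id"
    if "bye" in text or "thanks" in text:
        return "goodbye"
    return "unknown"


def step(state: State, utterance: str) -> State:
    """Advance the machine; unrecognized input keeps the current state."""
    intent = detect_intent(utterance)
    return TRANSITIONS.get((state, intent), state)


def run_conversation(utterances):
    """Feed utterances through the machine and record every state visited."""
    state = State.GREETING
    history = [state]
    for utterance in utterances:
        state = step(state, utterance)
        history.append(state)
    return history


if __name__ == "__main__":
    for visited in run_conversation(
        ["I want to track my package", "It is 12345", "thanks, bye"]
    ):
        print(visited.name)
```

The transition table is the deterministic part: every path through the conversation is known in advance, which is what makes this style easy to test and hard to personalize.
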
But are they finite state machines if they are generative? (That’s a discussion for another day.) The industry has thus far focused on bots, virtual agents, and generative agents. But do we stop there, or do we need a hybrid agent that combines the best of both worlds: a deterministic flow with generative handling? Hybrid agents will provide the guardrails we need from a rule-based system. The point where finite state machines and generative AI cross will redefine the conversational experience of users. No longer will a user be asked to interact with a specific set of menus and options; users will expect an experience that is personalized for them based on their interests.

On to the code. Here is a code lab, in two parts, that you can walk through to set up and build an agent by yourself:

- [Part I] Building the Tool: https://codelabs.developers.google.com/smart-shop-agent-alloydb?hl=en#0
- [Part II] Building the Agent: https://codelabs.developers.google.com/smart-shop-agent-vertexai?hl=en#0

I highly recommend watching this video from Patrick Marlow walking through an Agent and its concepts, “What is a Generative AI Agent?”, and “Workflow Agent Automation”.

Why Conversational Agents? It’s a mature product from Google that has existed for over 10 years, understands enterprise challenges and limitations, and has a path for both deterministic and generative flows:

- Api.ai launched in 2014
- Google buys Api.ai in 2016 and later rebrands it to Dialogflow (subsequently known as Dialogflow ES)
- Dialogflow CX launched in August 2020 with its first API release and UI
- Dialogflow CX adds GenAI features in GA in August 2023 (i.e. Generators, Datastore Agents, Generative Fallback)

If you would like to go further into areas such as evaluations on DFCX agents, NLU analysis, and bot building, please review https://github.com/GoogleCloudPlatform/dfcx-scrapi

We learnt the concepts in building a conversational agent and the tools to build it.
Next week, let’s focus on agents from a workflow integration perspective. This post is cross-posted on Medium, LinkedIn and my blog. As always, please reach out to kanch@cloudrace.info with questions, thoughts, or suggestions.

A Typology of AI Agents

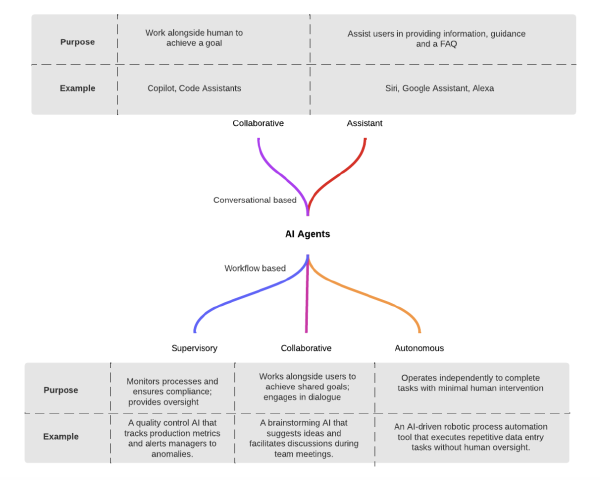

Disclaimer: The following article reflects my own comments based on my research and has no bearing on my employer. Any reproduction of this article needs explicit permission from the author. The article discusses concepts that are rapidly changing and should be read as a point-in-time view as of this release in September 2024.

We uncovered some of the key concepts of Agents earlier (Evolution and What are Agents). In this document, we walk through several different types of AI Agents.

Before we delve into the topic of agent types and their involvement, it’s essential to understand that there is no one-size-fits-all approach. Different perspectives and interpretations exist, and the following is my personal viewpoint. As Andrew Ng once wisely said, “The only way to learn is to build things.” With that in mind, let’s explore some of the agent types and their potential roles.

In our world today, we see most vendors and platforms emphasizing conversational agents as “THE” AI Agents. Every website today has a “kind” of virtual agent or conversational AI agent. We first need to understand the difference between a chatbot, a virtual agent, and a conversational AI agent:

- Chatbot: A basic program designed to simulate conversation through text or voice, often following scripted interactions. (This existed pre-generative AI.)
- Virtual Agent: A more advanced AI that can perform specific tasks and provide support, often incorporating natural language processing and contextual understanding. (This also existed pre-generative AI.)
- Conversational AI Agent: An intelligent system capable of understanding, processing, and generating human-like dialogue across various contexts, often using machine learning to improve interactions over time.

Then the question becomes: are conversational AI agents the only ones?
The surfaces for AI agent development are evolving towards a workflow-based approach where reasoning, planning, evaluation, and execution are needed. Below we differentiate the types based on surface, complexity and domain.

Based on surface

In an enterprise, we see a few types of agents based on the surface. Some of these are conversational, as mentioned above, and some are based on workflow orchestration. We classify the agents based on the surface as below:

- Conversational Agents (Collaborative Agents and Assistive Agents)
- Workflow Orchestration Agents (Supervisory, Collaborative and Autonomous)

More on examples and purpose below.

Based on the complexity

Single - When an agent performs reasoning and acting (ReAct):

- with its LLM (a foundation model or a fine-tuned model)
- with its one or more contexts through a RAG-based data store
- with its one or more tools based on an OpenAPI schema (any API calls)
- with its session-based access information
- with its episodic memory
- with its prompts that adopt a persona, clear instructions and few-shot examples

Multiple - When multiple agents are orchestrating towards the completion of a task:

- with their observation of the other agents’ tasks and completion
- with their collaboration on the orchestration of multiple agents

Autonomous - When agents perform tasks that do not require intervention and can execute:

- with their self-refinement
- with their self-learning
- with their scaling up and down based on the task needs

Based on the domain

We see a plethora of companies swarming the market with their own version of autonomous agents to drive adoption of their platforms. It can be considered an evolution of a SaaS platform with more and more agents in a marketplace.
While some of these organizations began with a chatbot as a starting point, it would be a quick turnaround to “Reason and Act”:

- Salesforce Agents
- Workday Agents
- Adobe Sensei
- HubSpot Breeze
- ServiceNow AI Agent

Though these are the types of agents, there are several more based on “n” number of classifications. For now, let’s focus on the frameworks available in the market to deploy these agents. Popular frameworks for building AI agents include:

- LangChain & LangGraph
- CrewAI
- AutoGen
- LlamaIndex

Though these frameworks are popular, their overhead is starting to give pause to widespread adoption. There are certainly adoptions that benefit from them. However, the rising concept of LLMOps/GenOps will certainly need to be evaluated for AI agents, and there is more to come.

Later in this series, we will get hands on with how to start building agents. This post is cross-posted on Medium, LinkedIn and my blog. As always, please reach out to kanch@cloudrace.info with questions, thoughts, or suggestions.

WTH are AI Agents?

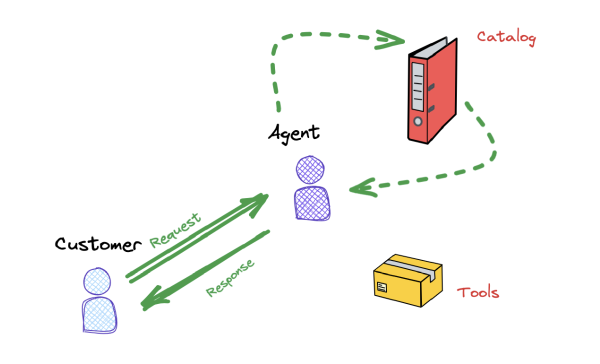

As a developer, you may be intrigued by the concept of AI Agents. Despite their growing popularity, the underlying idea is not novel. You may have encountered similar concepts before. The democratization of AI happens when developers have access to new tools that align with their knowledge and experience. This article aims to bridge the gap between the familiar and the unfamiliar by exploring the similarities and differences between AI Agents and concepts you might have encountered in the past. The goal is to enhance your understanding and utilization of AI Agents.

“Agent” as a word

Agent is not a new word. Before software engineering existed, agents existed: human agents such as real estate agents, customer service agents, travel agents, etc. The specialty of these agents is that they understand the context of a request, they have a catalog of information, and they service the request based on its input. They leverage tools to perform their tasks depending on the role.

Such an agent receives a request and consults the catalog, but leverages some sociocultural reasoning before creating a response. For example, imagine the agent working in a lost and found section:

Customer: “Where is my bag?”

Agent: Checks the catalog and does not find the bag. (Reasons and leverages a tool to perform a task.)

Agent: Sees the bag on the customer’s shoulder and uses their social reasoning skills to respond, “Are you sure it’s not on your shoulder?”

The above showcases reasoning skills, leveraging a tool, and performing an action where needed.

Agent in a software world

Let’s look at the word from a software perspective. When software engineering concepts arrived, we had an evolution of these agents. We had a series of agents: network agent, monitoring agent, deployment agent. All of these were meant to orchestrate a workflow, create consistency for repeatability, or in general perform a task for which a path is clearly defined and a sequence of actions can be laid out.
Well, let’s see how agents have evolved into AI agents. For example, consider a monitoring agent that we are going to develop (a simplistic approach):

1. Initialize monitoring parameters and thresholds.
2. Continuously collect data from agent logs, performance metrics, and security events.
3. Aggregate and store data in a central repository.
4. Perform real-time analysis of the data stream:
   - Check for anomalies, errors, or security violations.
   - If detected, trigger alerts and take appropriate actions.
5. Perform historical analysis of data:
   - Identify trends, patterns, and potential issues.
6. Generate reports and visualizations on a regular basis.
7. Refine monitoring parameters and thresholds based on feedback.
8. Repeat steps 2-7 continuously.

For the above system, let’s write the code in object-oriented programming (hypothetical, with just declarations):

    import java.util.*;

    public class MonitoringAgent {
        // Member variables
        private Agent agent;
        private DataRepository repository;
        private AlertingSystem alerter;
        private MonitoringParameters parameters;

        // Constructor
        public MonitoringAgent(Agent agentToMonitor) {
            // Initialization logic
        }

        // Main monitoring loop
        public void run() {
            // Main monitoring loop logic
        }

        // Other methods (placeholders)
        // (e.g., for parameter adjustment, historical analysis, etc.)
    }

In the above example, Agent, DataRepository, AlertingSystem, and MonitoringParameters are all classes whose objects are held as members of the MonitoringAgent class. Each of these agents will have:

- a memory component for a knowledge source or external knowledge through files
- a tool component that executes, creates, or analyzes something
- a layer that connects these agents where needed

Agents in GenAI

Now let’s come to LLM agents, which are very similar to what we have learnt before with a human agent or a software system built with object-oriented programming (OOP). An AI agent is one that leverages reasoning skills, memory and execution skills to complete an interaction.
This interaction could be a simple task, a simple question, or a complex task. Reviewing the concepts from the previous two sections, they have most things in common. When we discuss AI agents, we mean an instantiation of a foundation model that performs a task using the corpus of knowledge it was trained on and the grounded information available to that LLM.

For example, imagine creating a similar monitoring agent with an LLM. Leveraging the knowledge it has of certain errors, it can offer recommendations in addition to the capabilities that the regular software agent we built could have provided.

Let’s now walk through an example that creates an agent using Gemini with function calling (a tool). We will explore how the agent is defined and how it performs its task using tools and knowledge. You would need a Google Cloud account to test this notebook. Instructions on how to get started here.

Once you get past the installations and declarations, you will find a function definition:

    def get_exchange_rate(
        currency_from: str = "USD",
        currency_to: str = "EUR",
        currency_date: str = "latest",
    ):
        """Retrieves the exchange rate between two currencies on a specified date."""
        import requests

        # Query the public Frankfurter API for the requested date and currency pair.
        response = requests.get(
            f"https://api.frankfurter.app/{currency_date}",
            params={"from": currency_from, "to": currency_to},
        )
        return response.json()

Here, get_exchange_rate is a tool that calls the api.frankfurter.app API.

    # `reasoning_engines` and `model` come from the notebook's earlier declarations.
    agent = reasoning_engines.LangchainAgent(
        model=model,
        tools=[get_exchange_rate],
        agent_executor_kwargs={"return_intermediate_steps": True},
    )

The agent is defined through a LangChain agent with a model and tools. This example does not have grounded information, but it is still a worthwhile place to start.

What we don’t know

Smaller vs Larger - There is still debate about whether one large AI agent will be needed to solve a complex problem or whether smaller AI agents will each focus on excelling at certain tasks.

Cons of LLMs follow - Agents, being an evolution of LLMs, still have all of their cons, such as hallucinations.

Autonomous - Though autonomous agents are starting to get hype and we see prototypes, it’s still a challenge to create an enterprise application without a human in the loop.

Thanks to Hussain Chinoy for the brainstorming and his relentlessness in making sure we don’t forget, and do learn from, our mistakes of software engineering. If you are looking for best practices, a good place to start may be software development.

In our future series, we will cover:

- 3: Type of Agents
- 4: Develop AI Agents
- 5: Agent Enterprise needs

Do you have other topics in mind? Please do suggest them. If you have questions/comments/suggestions, please reach out to me at kanch@cloudrace.info

AI Agents 101

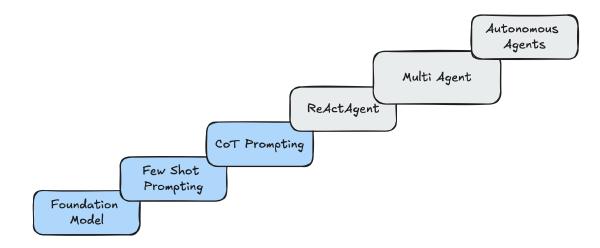

AI Agents Evolution

Are you baffled by the AI buzzwords, wanting to understand how a generative AI application comes together, or trying to understand what makes sense for your org? I hope to cover AI agents in a series of articles. Let’s start from the basics. In this article, I walk you through one example of how the patterns for generative AI applications have evolved in just a year.

Disclaimer: The following article reflects my own comments based on my research and has no bearing on my employer. Any reproduction of this article needs explicit permission from the author.

Over the past year, there has been a surge of interest in Large Language Models (LLMs) and their potential applications. As the field continues to evolve and gain momentum, it is becoming increasingly apparent that the current approaches to LLM applications are insufficient to fully harness their potential.

One of the key limitations of current LLM applications is that they are primarily designed as single-purpose tools. This means that they are only able to perform a narrow range of tasks and require significant adaptation and fine-tuning for each new task. This limitation makes it difficult to scale LLM applications to a wide range of real-world problems and scenarios.

To address this limitation, there is a growing need for a new type of LLM architecture that is capable of supporting a wide range of tasks and applications without the need for extensive adaptation. This new architecture, known as Agentic architecture, takes inspiration from the concept of agents. We will go over this in more detail later.

An agent is an entity that is capable of perceiving its environment, taking actions, and learning from its experiences. Agentic architecture applies this concept to LLM applications by providing them with a set of core capabilities that enable them to adapt to different tasks and environments.
These capabilities include:

- Reasoning: The ability to understand and interpret the world around them, including natural language, images, and other forms of data.
- Action: The ability to take actions within their environment, such as generating text, answering questions, and controlling physical devices through the use of tools.

By incorporating these capabilities into LLM applications, Agentic architecture enables them to become more versatile and adaptable. This allows them to be applied to a wider range of tasks and problems, from customer service chatbots to autonomous vehicles.

As the field of LLM applications continues to evolve, it is likely that Agentic architecture will become increasingly important. This new architecture has the potential to unlock the full potential of LLMs and revolutionize the way we interact with technology.

While the example showcased here emphasizes the conversational nature of LLMs, their potential impact extends far beyond mere conversational interactions. LLMs are poised to revolutionize multiple facets of our daily lives. Their capacity to comprehend and produce natural language, combined with the potential for integration with other technologies, unlocks a world of opportunities for enhancing efficiency, personalization, accessibility, and overall quality of life. These examples aim to provide insight into the architecture of LLMs and how they can adapt to diverse needs and requirements.

About “Gemini Getaways”

Imagine you run a fictional travel agency, “Gemini Getaways”, looking to adopt generative AI in travel planning for your customers.
Assumptions on what exists today:

- A database of itineraries with flights, accommodations, sightseeing recommendations, preferences, budgets, key events, etc.
- For flights, current availability information that depends on an external API
- For personalized recommendations, customer profile information maintained by the travel agency, with preferences such as stops, duration, pet friendly, family friendly, etc.

Evolution of Agents

Foundation Model Call

If you were to create an application that answers “Plan a 3 day itinerary to Paris”.

Action taken: Based on the “Transformer” research from Google, which is the backbone of LLMs:

- Tokenization - the question is converted to tokens, which are words, subwords, or characters
- Embedding - tokens are converted to vectors (machine understandable) that are semantically and contextually aligned based on the foundation model’s knowledge source
- Encoder + Decoder approach - the embeddings are then fed to components that predict the next token based on what the model knows

More on foundation models here.

Few Shot Prompting

If you were to create an application that answers “Plan a 3 day trip itinerary to Paris” and you have added two samples, such as “Plan a 3 day trip itinerary to Rome” and “Plan a 3 day trip itinerary to Tokyo”, with the answers focused on art museums.

Action taken: This is considered few-shot prompting. The approach is similar to the above, but it additionally influences the LLM’s response generation by providing context and examples, leading to more focused, informative, and well-structured answers. Through few-shot prompting you are guiding the foundation model on the template of the outputs and on some of the reasoning, which in this case may be art museums. More on few shot prompting here.

Chain of Thought Prompting

If you were to create an application that answers “A flight departs San Francisco at 11:00 AM PST and arrives in Chicago at 4:00 PM CST. The connecting flight to New York leaves at 5:30 PM CST. Is there enough time to make the connection?”

Action taken: For the above question, the approach would be similar to before. However, the question needs in-depth reasoning skills to derive the answer, in addition to the knowledge of the foundation model. It is not just knowing the answer but knowing how to get to the answer. This was addressed in the “Chain of Thought Prompting” paper. Likely the steps will be to handle the time zone conversion, calculate the layover time, consider the minimum connection time, and then arrive at the final result.

Chain of thought prompting involves “reasoning” skills to identify the course of action to take. However, the reasoning is limited to the foundation model’s knowledge. It is very apt for mathematical reasoning and common sense reasoning. More on Chain of thought prompting is here.

ReAct Agent

If you were to create an application that answers “Book me a flight that leaves Boston to Paris and make itinerary arrangements for art museums”.

Action taken: “ReAct Based Agent” - In this research paper by Google, the concept of an Agentic approach with “Reasoning” and “Acting” is introduced, utilizing Large Language Models (LLMs). This approach aims to move towards human-aligned task-solving trajectories, enhancing interpretability, diagnosability, and controllability.

Agents, in general, comprise a “core” component consisting of an LLM foundation model, instructions, memory, and grounding knowledge. To interact with external systems or APIs, specialized agents are often required. These agents serve as intermediaries, receiving instructions from the LLM and executing specific actions. They may be referred to as function-calling agents, extensions, or plugins.
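
The reason-then-act cycle described above can be sketched as a small loop. This is only an illustration under stated assumptions: `scripted_model` is a hypothetical stand-in for a real LLM call, `search_flights` and `find_museums` are made-up tools, and every value they return is placeholder data rather than real flight or museum information.

```python
from typing import Callable, Dict, List, Tuple


def search_flights(query: str) -> str:
    """Hypothetical tool: a real agent would call a booking API here."""
    # Placeholder data, invented for this sketch.
    return f"Found 2 flights for '{query}': BOS-CDG 18:05, BOS-CDG 21:40"


def find_museums(city: str) -> str:
    """Hypothetical tool backed by a tiny in-memory knowledge source."""
    catalog = {"Paris": "Louvre, Musee d'Orsay, Centre Pompidou"}
    return catalog.get(city, "no museums on file")


TOOLS: Dict[str, Callable[[str], str]] = {
    "search_flights": search_flights,
    "find_museums": find_museums,
}


def scripted_model(transcript: List[str]) -> Tuple[str, str, str]:
    """Stand-in for an LLM call; returns (thought, action, action_input).

    It chooses the next step from how many observations exist so far.
    """
    observations = sum(1 for line in transcript if line.startswith("Observation:"))
    if observations == 0:
        return ("I need flight options first.", "search_flights", "Boston to Paris")
    if observations == 1:
        return ("Now I need art museums for the itinerary.", "find_museums", "Paris")
    return ("I have everything I need.", "finish", "Flights and museums gathered.")


def react_loop(question: str, model=scripted_model, max_steps: int = 5) -> List[str]:
    """Alternate between reasoning (the model) and acting (a tool call)."""
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        thought, action, action_input = model(transcript)
        transcript.append(f"Thought: {thought}")
        if action == "finish":
            transcript.append(f"Answer: {action_input}")
            break
        observation = TOOLS[action](action_input)
        transcript.append(f"Action: {action}[{action_input}]")
        transcript.append(f"Observation: {observation}")
    return transcript


if __name__ == "__main__":
    for line in react_loop("Book me a flight from Boston to Paris and plan art museums"):
        print(line)
```

Each tool result is appended to the transcript as an observation, so the next reasoning step can build on it. Swapping `scripted_model` for a real model call would keep the loop itself unchanged.
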
We will discuss more about what an agent is and the types of agents in a future blog in this series.

In the case of booking a flight, the agent would leverage an API call to a booking API to check availability, fares, and make reservations. Additionally, it would utilize a knowledge source containing information about art museums to provide relevant itineraries and recommendations.

Multi Agent

If you were to create an application that answers “Book me a hotel and flights in New York City that are pet friendly and no smoking”.

Action taken: The example showcases a scenario where multiple ReAct agents are chained together. Unlike in previous examples, these agents do not require orchestration; instead, they announce their availability and capabilities through self-declaration. This approach enables seamless collaboration among the agents, allowing them to collectively tackle complex tasks and deliver enhanced user experiences.

By combining multiple agents, tools, and knowledge sources, AI systems can achieve remarkable capabilities. They can handle intricate tasks, provide personalized experiences tailored to individual users, and engage in not only natural and informative conversations but also key aspects of a business’s workflow. This integration of various components allows AI systems to become indispensable partners in various domains, offering valuable assistance and automating repetitive or time-consuming tasks.

Overall, the combination of multiple agents, tools, and knowledge sources empowers AI systems to handle complex tasks, deliver personalized experiences, and engage users in a comprehensive and meaningful way. As AI continues to evolve, we can expect even more innovative and groundbreaking applications of this technology, transforming industries and enhancing our daily lives.
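
One way to picture self-declaration is a shared registry that agents announce themselves to, so that work is routed by declared capability rather than by hard-coded wiring. The sketch below is an assumption-laden illustration: the `Agent` and `Registry` classes, the capability names, and the `plan_trip` helper are all hypothetical and do not come from any specific framework.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class Agent:
    name: str
    capabilities: Set[str]

    def handle(self, task: str, constraints: Dict[str, bool]) -> str:
        """Pretend to do the work; a real agent would reason and call tools."""
        wanted = ", ".join(sorted(k for k, v in constraints.items() if v))
        return f"{self.name} handled '{task}' with constraints: {wanted}"


class Registry:
    """Directory where agents declare what they are able to do."""

    def __init__(self) -> None:
        self._by_capability: Dict[str, List[Agent]] = {}

    def announce(self, agent: Agent) -> None:
        # Index the agent under every capability it declares.
        for capability in agent.capabilities:
            self._by_capability.setdefault(capability, []).append(agent)

    def find(self, capability: str) -> List[Agent]:
        return self._by_capability.get(capability, [])


def plan_trip(registry: Registry, constraints: Dict[str, bool]) -> List[str]:
    """Route each sub-task to the first agent that declared the capability."""
    results = []
    for task in ("book_hotel", "book_flight"):
        candidates = registry.find(task)
        if not candidates:
            results.append(f"no agent available for '{task}'")
            continue
        results.append(candidates[0].handle(task, constraints))
    return results


if __name__ == "__main__":
    registry = Registry()
    registry.announce(Agent("HotelAgent", {"book_hotel"}))
    registry.announce(Agent("FlightAgent", {"book_flight"}))
    for line in plan_trip(registry, {"pet_friendly": True, "no_smoking": True}):
        print(line)
```

Adding a new agent only requires another `announce` call; nothing in `plan_trip` needs to know which agents exist ahead of time.
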
Autonomous Agent

If you were to create an application that answers “Book me a hotel and flights in New York City that are pet friendly, no smoking, and available on both my and my friends’ calendars”.

Indeed, the path to creating effective Agentic AI systems requires more than just reasoning, acting, or collaboration. It also demands the ability to engage in self-refinement and participate in debates to determine the most optimal outcome. The examples we have explored demonstrate that while many aspects of Agentic AI can be implemented at a production level today, there are still key areas that require further refinement to achieve true production-level quality.

In the specific example of booking this trip, we need to combine the actions of booking, incorporate reasoning across multiple filters and bookings, and facilitate collaboration among multiple agents, all while debating the best date for all parties involved. This process requires the ability to self-refine and adapt based on feedback and changing circumstances.

In conclusion, through these examples, we have embarked on a journey that showcases how LLM-based architectures are evolving into Agentic AI workflows, which hold the potential to revolutionize our approach to building for the future. We have witnessed the transformation from a simple foundation model to an autonomous agent, unfolding before our eyes as we explore the evolution of an entire industry at our fingertips.

This is going to be pivotal for any industry we are aligned with. If you are ready to experiment with agents, this series will cover some hands-on code you can work with.

In our future series, we will cover some topics and examples to follow through:

- 2: Agent architectures a new thing?
- 3: Type of Agents
- 4: Develop AI Agents
- 5: Agent Enterprise needs

Do you have other topics in mind? Please do suggest them. If you have questions/comments/suggestions, please reach out to me at kanch@cloudrace.info